Goals and Challenges of Global AI Governance and Chinese Solutions

Author: Lu Chuanying

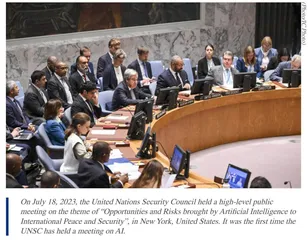

In July 2023, United Nations (UN) Secretary-General António Guterres called for the establishment of a dedicated UN entity responsible for artificial intelligence (AI) safety governance and announced a High-level Advisory Body on AI to explore the relevant issues, raising the curtain on global AI governance. In October 2023, Chinese President Xi Jinping put forward the Global AI Governance Initiative in his keynote address at the opening ceremony of the Third Belt and Road Forum for International Cooperation, setting out China's policy positions on AI governance for the international community.

Opening a New Chapter in Global AI Governance

Global AI governance refers to the process by which governments, markets, technology communities and other actors jointly formulate and implement principles, norms and institutions for the safe development and peaceful use of AI worldwide. As a strategic technology, AI has the potential to reshape the global landscape and the course of human development. Its development and application involve a far wider range of actors and industries than conventional technologies do, making it the most complex and most influential of strategic technologies. Because AI spans so many topics and actors, the absence of rules and order would leave many disagreements among the parties over ethics, norms and security unresolved and lead to more conflict.

First, safe development and peaceful use have become the new order of global AI governance. As far as the technology itself is concerned, the models, algorithms and data that make up AI carry serious security risks, including the risks of "black box" operation and loss of control. On the one hand, the security risks of AI stem from the "black box" nature of the algorithms themselves: developers do not fully understand how AI systems reach their decisions. On the other hand, humans do not yet adequately understand what AI is capable of, which creates a risk of abuse. If these risks are not guarded against, a single error could be catastrophic. Ensuring the safe use of AI is therefore of the utmost importance, and safety should be the precondition for developing any AI technology. The international community should reach a consensus on this and jointly promote the safe development of AI.

In terms of application, AI has enormous potential in the military and intelligence fields, but it also carries great risks. If AI is abused on the battlefield, it could cause irreparable damage. Moreover, as a strategic technology, AI has become a new strategic high ground that the world's major military powers are competing to seize. Countries have invested heavily in military AI for intelligence, surveillance and reconnaissance (ISR), for cybersecurity, for command and control of various semi-autonomous and autonomous vehicles, and for improving routine efficiency in areas such as logistics, recruitment, performance management and maintenance. Making peaceful use a precondition of AI development can effectively prevent its excessive militarization and curb an AI-driven arms race.

Furthermore, building a mechanism complex has become the path to realizing global AI governance. Global AI governance mechanisms are crucial for managing differences, seeking consensus and achieving governance goals. Unlike a principle-based order, mechanisms designed to solve a particular class of problems are more specific and more binding. Because AI is a general-purpose technology, its governance spans a wide range of fields and requires a set of loosely coupled mechanisms that together form a complex of global governance mechanisms. Each mechanism has a clearly defined topic, set of participants and mode of interaction. There are now more than 50 global AI governance initiatives, norms and mechanisms. Their governance practices operate at multiple levels, including universal moral and ethical principles, rules of state behavior, technical standards and industry application practices, and their participants include international organizations, governments, the private sector, technology communities, citizen groups and other entities.

In terms of issues, current AI governance focuses mainly on three areas: ethics, norms and security. AI development and AI governance have always gone hand in hand, since governance is a response to the problems and challenges that the technology and its applications bring. As technology advances and applications achieve breakthroughs, the agenda of AI governance keeps expanding, and governance capacity must be continuously improved to match. Early AI governance focused on ethical issues, chiefly the relationship between humans and machines, and put forward the principle of maintaining human control over machines. As military AI applications such as drones advanced, norms became the focus of governance. Responsible use of military AI involves not only applying existing international norms to military AI, but also constructing new norms to further restrain its irresponsible use. With breakthroughs in large language models (LLMs), security governance of models, algorithms and data has become a major new issue.

Although these governance mechanisms pursue different goals, the strategic and all-encompassing nature of AI means that a fragmented governance model may generate more conflict and hinder problem-solving. Coordinating the relationships among different mechanisms helps actors make relatively rational and comprehensive decisions as they participate in global AI governance. In addition, AI governance mechanisms are more interconnected than those in other governance areas. Because AI technology plays an important role across different issues, it forms a common thread running through the global AI governance mechanism complex, reflected in the linkages among issues and in the patterns of interaction among actors. This requires AI governance concepts and models to be adjusted accordingly.